OCR & Coda AI

Extract info from your PDF files with smart prompts

Recently I explained how to use the OCR pack, and at the end I gave some examples of how to benefit from it. The focus of this blog is to show my setup for the prompts. These prompts can easily be manipulated, adapted and even replaced.

Below you see the code snippet we use.

The format function

The format function has two parts: first the template, then the variables. The template is a string, meaning a combination of characters (letters, numbers, symbols, punctuation and possibly spaces) in a particular order. In Coda the value type ‘Text’ represents a string. In this string we have placeholders, and they look like {1}, as you can see below.

The numbers in the placeholders refer to the variables in the second part. Number 1 goes to the first variable, number 2 to the second, and so on. You can start your text with {3}, which means that you start with the third variable. The numbers correspond to the position of the variable. The string is separated from the variables by a comma, and the variables are separated from each other by commas.

Below is how the function normally looks. The double quotes, which you do not see in the function I presented, are necessary in the standard function to force the data between them into a text format. Feel free to use single quotes, by the way.

Format("{1} {2} {3}", var01, var02, var03)

In our scenario the template is already text, and therefore you do not see the quotes.

Now you understand the logic of the format function and you can see that {1} in my example refers to the output generated by the OCR pack.
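To make the numbering concrete, here is a minimal Python sketch that mimics the 1-based placeholders described above. It is not Coda's implementation: the helper name `coda_format` is made up for illustration, and leaving an out-of-range placeholder untouched is an assumption, not documented Coda behavior.

```python
import re


def coda_format(template: str, *values: object) -> str:
    """Mimic 1-based placeholders: {1} is the first value after the template."""

    def substitute(match: re.Match) -> str:
        position = int(match.group(1))
        if not 1 <= position <= len(values):
            # Assumption: a placeholder without a matching value stays as-is.
            return match.group(0)
        # Positions start at 1, Python indexes start at 0.
        return str(values[position - 1])

    return re.sub(r"\{(\d+)\}", substitute, template)


# Placeholders are filled by position, not by order of appearance.
print(coda_format("{1} {2} {3}", "var01", "var02", "var03"))
print(coda_format("{3} comes first, then {1}", "a", "b", "c"))
```

The second call shows that a template may start with {3}: the number picks the variable by its position, wherever the placeholder sits in the text.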

The prompt

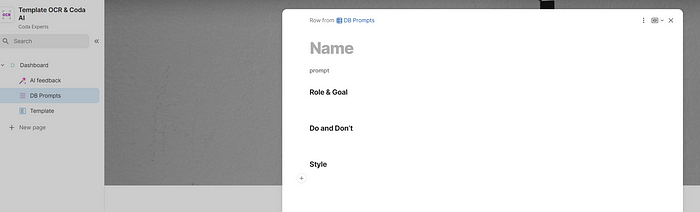

The prompt I reference lives in a prompt table, and I made the prompts easy to find by starting their names with ai, as you can see below.

The structure of a prompt

Last year I wrote about how to structure a prompt, and I believe this suggestion is still useful. Even as the AIs get smarter and stronger, it helps to structure your own thoughts and increases the chances of a better output. Below is a logic I often apply.

- Role & Goal

- Do & don’t

- Style

In the prompt table we inject this schema and then fill out the details. The placeholder {1} references the output generated by the OCR pack. That is the only technical thing we need to keep in mind.

Below you see how I templated this setup for when I create a new prompt.
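As an illustration of this schema, the Python sketch below fills a three-part prompt with OCR text. The prompt wording, the sample text and the function name `build_prompt` are hypothetical; only the Role & Goal / Do & don't / Style layout and the {1} placeholder come from the setup described here.

```python
# Hypothetical prompt following the Role & Goal / Do & don't / Style schema.
PROMPT_TEMPLATE = """\
Role & Goal: You are an assistant that summarizes scanned documents.
Do & don't: Use only facts found in the text. Do not guess missing values.
Style: Short bullet points in plain English.

Text to analyze:
{1}"""


def build_prompt(template: str, ocr_output: str) -> str:
    """Replace the single {1} placeholder with the text returned by the OCR step."""
    return template.replace("{1}", ocr_output)


# Made-up OCR output, used only to show the result.
print(build_prompt(PROMPT_TEMPLATE, "Invoice 42, due 1 June."))
```

Keeping the schema fixed and changing only the details makes it easy to compare prompts and to swap one for another.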

Format String — text

In the second part of the format function we have the variable, which is output. Makers are used to this setup. The first part, however, is a bit different: it is the cell in the prompt table. We get there as follows:

- custom prompt

- click on “=” to open the formula editor

- type @ ai and we get the prompt row

- chain this row with .prompt

Following these instructions, you have your prompt living outside the AI column. This is useful for multiple reasons. One of them is that you can easily update the prompt, and you can use a template via the value for new rows, as shown in the image above.
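The idea of keeping prompts in a table and referencing them by row can be sketched in Python as follows. The table contents, row names and function names are hypothetical stand-ins; in Coda the lookup is done by referencing the row and chaining .prompt, as in the steps above.

```python
# Hypothetical stand-in for the prompt table: one row per prompt,
# with names starting with "ai" so they are easy to find.
PROMPT_TABLE = [
    {"name": "ai summary", "prompt": "Summarize the text below in five bullets:\n{1}"},
    {"name": "ai dates", "prompt": "List every date mentioned in the text below:\n{1}"},
]


def find_prompt(name: str) -> str:
    """Return the prompt cell of the row with the given name."""
    for row in PROMPT_TABLE:
        if row["name"] == name:
            return row["prompt"]
    raise KeyError(f"No prompt row named {name!r}")


def custom_prompt(name: str, ocr_output: str) -> str:
    """Fill the {1} placeholder of the stored prompt with the OCR text."""
    return find_prompt(name).replace("{1}", ocr_output)


print(custom_prompt("ai dates", "Meeting on 3 May 2024."))
```

Because the prompt text lives in one place, editing a row changes the prompt everywhere it is referenced.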

AI limits

I counted the words in the text: it has a bit over 15,000 words, and that is too much for now. The GPT-4 model applied here doesn’t have a strict word limit but instead uses a token system. A token is roughly equivalent to 4 characters or 0.75 words. GPT-4 can handle 8,192 tokens, roughly translating to 6,144 words. However, this includes both your input and the model’s output. The longer the prompt, the less room there is for the text you want to question.
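The arithmetic behind these numbers can be checked with a small Python sketch. It uses only the rule of thumb above (0.75 words per token, 8,192 tokens in total); the function names and the amount reserved for the model's answer are assumptions for illustration, and a real tokenizer will give different counts.

```python
import math

CONTEXT_LIMIT_TOKENS = 8192  # limit quoted above for GPT-4
WORDS_PER_TOKEN = 0.75       # rule of thumb, not an exact tokenizer


def estimate_tokens(word_count: int) -> int:
    """Rough token estimate for a text with the given number of words."""
    return math.ceil(word_count / WORDS_PER_TOKEN)


def words_left_for_text(prompt_words: int, reserved_output_tokens: int) -> int:
    """Words that still fit after the prompt and the reserved answer space."""
    remaining = (
        CONTEXT_LIMIT_TOKENS
        - estimate_tokens(prompt_words)
        - reserved_output_tokens
    )
    return max(0, math.floor(remaining * WORDS_PER_TOKEN))


print(estimate_tokens(15000))         # the 15,000-word text
print(words_left_for_text(0, 0))      # upper bound with no prompt and no answer
print(words_left_for_text(150, 500))  # hypothetical prompt and answer budget
```

By this estimate, a text of 15,000 words needs about 20,000 tokens, well over twice the limit, so it has to be shortened or split before it can be questioned.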

Anyway, this AI limitation will fade out over time.

coda-brain

Coda AI is a work assistant and, like any assistant, it has its limits. These limits are far less restrictive in the Coda-brain concept. More about it here, and have a look at the video.

Coda-brain can easily manage text with 15K words and more. As you can see, when you want to do more with Coda, the coda-brain concept may be for you.

The doc showcasing the applied logic:

Other AI-driven use case

In this blog I wrote about the application of AI in the context of travel. At that time we had GPT-3.5. Meanwhile Coda has upgraded to GPT-4, so the outcomes will be far better.

I hope you enjoyed this article. If you have questions, feel free to reach out. My name is Christiaan and I blog about Coda. Though this article is free, my work (including advice) won’t be, but there is always room for a chat to see what can be done. You can find my free contributions in the Coda Community and on Twitter. The Coda Community provides great insights for free once you add a sample doc.

All the AI features we are starting to see appear — lower prices, higher speeds, multimodal capability, voice, large context windows, agentic behavior — are about making AI more present and more naturally connected to human systems and processes. If an AI that seems to reason like a human being can see and interact and plan like a human being, then it can have influence in the human world. This is where AI labs are leading us: to a near future of AI as coworker, friend, and ubiquitous presence. I don’t think anyone, including OpenAI, has a full sense of all of the implications of this shift, and what it will mean for all of us